PyTorch Tutorials


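1. Tensor

🔸 Creating tensors with random values

- torch.rand() : samples uniformly from the interval [0, 1)

- torch.rand_like() : takes an existing tensor instead of a size tuple and returns a tensor of the same shape

- torch.randn() : samples from the standard normal (Gaussian) distribution with mean 0 and standard deviation 1

- torch.randn_like() : same as torch.randn(), but the shape is taken from an existing tensor

- torch.randint() : samples integers uniformly from the given range [low, high); the default dtype is torch.int64

- torch.randint_like() : same as torch.randint(), but the shape is taken from an existing tensor

- torch.randperm() : returns a random permutation of the integers 0 to n-1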
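As a rough illustration of the random-value functions listed above, the sketch below calls each of them once. The seed, shapes, and variable names are arbitrary choices made for this example.

```python
import torch

torch.manual_seed(0)  # fixed seed so repeated runs give the same values

base = torch.zeros(2, 3)             # existing tensor used as a shape template

u = torch.rand(2, 3)                 # uniform samples in [0, 1)
u_like = torch.rand_like(base)       # same shape and dtype as `base`
n = torch.randn(2, 3)                # standard normal samples
n_like = torch.randn_like(base)      # standard normal, shape taken from `base`
i = torch.randint(0, 10, (2, 3))     # integers in [0, 10), dtype torch.int64
i_like = torch.randint_like(base, 0, 10)  # keeps the dtype of `base` (float32 here)
p = torch.randperm(5)                # random permutation of 0..4

print(u.shape, n.shape, i.dtype, i_like.dtype)
print(sorted(p.tolist()))
```

Note that torch.randint_like() inherits the dtype of the template tensor, so it can return floating-point values that happen to be whole numbers.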

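🔸 Creating tensors with specific values

- torch.arange() : generates values in order over the given range [start, end) with a fixed step

- torch.ones() : creates a tensor of the given size filled with 1

- torch.zeros() : creates a tensor of the given size filled with 0

- torch.ones_like() : creates a tensor of ones whose shape is taken from an existing tensor instead of a size tuple

- torch.zeros_like() : creates a tensor of zeros whose shape is taken from an existing tensor instead of a size tuple

- torch.linspace() : returns a 1-D tensor with the given number of points spaced evenly between the start and end points

- torch.logspace() : returns a 1-D tensor with the given number of points spaced evenly on a log scale between base**start and base**end (base 10 by default)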
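The fixed-value constructors above can be tried out in a few lines. This is only a small sketch; the ranges and sizes are arbitrary values picked for illustration.

```python
import torch

a = torch.arange(0, 10, 2)            # 0, 2, 4, 6, 8 (end is exclusive)
o = torch.ones(2, 3)                  # 2x3 tensor of ones
z = torch.zeros_like(o)               # zeros with the shape of `o`
lin = torch.linspace(0, 1, steps=5)   # 0.00, 0.25, 0.50, 0.75, 1.00 (end is inclusive)
log = torch.logspace(0, 2, steps=3)   # 10**0, 10**1, 10**2

print(a, lin, log, sep="\n")
```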

2. Dataset and DataLoader

2.1 Dataset

PyTorch provides two data primitives, torch.utils.data.DataLoader and torch.utils.data.Dataset, that let you use pre-loaded datasets as well as your own data.

Dataset stores the samples and their corresponding labels,

and DataLoader wraps an iterable around the Dataset to give easy access to the samples.

2.1.1 Loading a Dataset from the library

    import torch
    from torchvision import datasets
    from torchvision.transforms import ToTensor, Lambda
    import matplotlib.pyplot as plt

torchvision consists of datasets, models, and transforms.

- torchvision.datasets provides datasets such as MNIST and Fashion-MNIST

- torchvision.models provides models such as AlexNet, VGG, and ResNet

- torchvision.transforms provides a variety of image transformations

- torchvision.transforms.ToTensor converts a PIL Image or NumPy ndarray into a FloatTensor and scales the pixel intensity values into the range [0., 1.]

- torchvision.transforms.Lambda applies a user-defined lambda function.

    training_data = datasets.FashionMNIST(
        root="data",
        train=True,
        download=True,
        transform=ToTensor(),
        # one-hot encode the integer label
        target_transform=Lambda(lambda y: torch.zeros(10, dtype=torch.float).scatter_(0, torch.tensor(y), value=1))
    )

    test_data = datasets.FashionMNIST(
        root="data",
        train=False,
        download=True,
        transform=ToTensor()
    )

The code above loads the FashionMNIST dataset with the following parameters.

root is the path where the training/test data is stored

train specifies whether to load the training or the test dataset

download=True downloads the data from the internet if it is not available at root

transform and target_transform specify the feature and label transformations

The lambda passed to Lambda defines a function that turns an integer into a one-hot encoded tensor. It first creates a zero tensor of size 10 (the number of labels in the dataset) and then calls scatter_, which assigns value=1 at the index given by the label y.
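To see the one-hot step in isolation, the sketch below pulls the same expression out of the Lambda into a standalone function. The helper name one_hot and the comparison against torch.nn.functional.one_hot are additions for illustration only.

```python
import torch
import torch.nn.functional as F

def one_hot(y, num_classes=10):
    """Hypothetical helper that mirrors the lambda passed to Lambda."""
    zeros = torch.zeros(num_classes, dtype=torch.float)
    # write value=1 at position y along dimension 0
    return zeros.scatter_(0, torch.tensor(y), value=1)

encoded = one_hot(7)
# Built-in equivalent, converted to float for comparison
reference = F.one_hot(torch.tensor(7), num_classes=10).float()

print(encoded)
print(torch.equal(encoded, reference))
```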

2.1.2 Iterating over and visualizing the Dataset

A Dataset can be indexed directly, like a list.

    labels_map = {
        0: "T-Shirt",
        1: "Trouser",
        2: "Pullover",
        3: "Dress",
        4: "Coat",
        5: "Sandal",
        6: "Shirt",
        7: "Sneaker",
        8: "Bag",
        9: "Ankle Boot",
    }

    figure = plt.figure(figsize=(8, 8))
    cols, rows = 3, 3
    for i in range(1, cols * rows + 1):
        sample_idx = torch.randint(len(training_data), size=(1,)).item()
        img, label = training_data[sample_idx]
        # training_data one-hot encodes its labels, so recover the class index
        if torch.is_tensor(label):
            label = label.argmax().item()
        figure.add_subplot(rows, cols, i)
        plt.title(labels_map[label])
        plt.imshow(img.squeeze(), cmap='gray')
    plt.show()

2.2 Creating a custom Dataset from files

A custom Dataset class must implement three functions: __init__, __len__, and __getitem__.

    from torch.utils.data import Dataset
    import os
    import pandas as pd
    from torchvision.io import read_image

    class CustomImageDataset(Dataset):
        def __init__(self, annotation_file, img_dir, transform=None, target_transform=None):
            self.img_labels = pd.read_csv(annotation_file, names=['file_name', 'label'])
            self.img_dir = img_dir
            self.transform = transform
            self.target_transform = target_transform

        def __len__(self):
            return len(self.img_labels)

        def __getitem__(self, idx):
            img_path = os.path.join(self.img_dir, self.img_labels.iloc[idx, 0])
            image = read_image(img_path)
            label = self.img_labels.iloc[idx, 1]
            if self.transform:
                image = self.transform(image)
            if self.target_transform:
                label = self.target_transform(label)
            sample = {"image": image, "label": label}
            return sample

- __init__

The __init__ function runs once, when the Dataset object is instantiated.

Here it initializes the directory containing the images, the annotation file (annotation_file), and the transforms.

When the Fashion-MNIST images are loaded from files, the annotation file (MNIST.csv in this example) looks like this:

    tshirt1.jpg, 0
    tshirt2.jpg, 0
    ......
    ankleboot999.jpg, 9

    def __len__(self):
        return len(self.img_labels)

- __len__

The __len__ function returns the number of samples in the dataset.

    def __getitem__(self, idx):
        img_path = os.path.join(self.img_dir, self.img_labels.iloc[idx, 0])
        image = read_image(img_path)
        label = self.img_labels.iloc[idx, 1]
        if self.transform:
            image = self.transform(image)
        if self.target_transform:
            label = self.target_transform(label)
        sample = {"image": image, "label": label}
        return sample

- __getitem__

The __getitem__ function loads and returns the sample at the given index idx.

Based on the index, it identifies the image's location on disk,

reads the image into a tensor with read_image,

retrieves the corresponding label from the csv data in self.img_labels,

calls the transform functions on them (if applicable),

and returns the tensor image and the label as a Python dict.

2.2.1 Example

Sample data

    import numpy as np

    train_images = np.random.randint(256, size=(20, 32, 32, 3))
    train_labels = np.random.randint(2, size=(20, 1))

    ## Preprocessing is usually done with a preprocessing module before converting to tensors
    # import preprocessing
    # train_images, train_labels = preprocessing(train_images, train_labels)

    print(train_images.shape, train_labels.shape)
    >>> (20, 32, 32, 3) (20, 1)

Custom dataset

    class CustomDataset(Dataset):
        def __init__(self, x_data, y_data, transform=None):
            self.x_data = x_data
            self.y_data = y_data
            self.transform = transform
            self.len = len(y_data)

        def __getitem__(self, index):
            sample = self.x_data[index], self.y_data[index]
            if self.transform:
                sample = self.transform(sample)
            return sample

        def __len__(self):
            return self.len

Writing custom classes for the transforms

    class ToTensor:
        def __call__(self, sample):
            inputs, labels = sample
            inputs = torch.FloatTensor(inputs)
            inputs = inputs.permute(2, 0, 1)  # HWC -> CHW
            return inputs, torch.LongTensor(labels)

    class LinearTensor:
        def __init__(self, slope, bias=0):
            self.slope = slope
            self.bias = bias

        def __call__(self, sample):
            inputs, labels = sample
            inputs = self.slope * inputs + self.bias
            return inputs, labels

Using the classes defined above

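    import torchvision.transforms as tr
    from torch.utils.data import DataLoader

    trans = tr.Compose([ToTensor(), LinearTensor(2, 5)])
    dataset = CustomDataset(train_images, train_labels, transform=trans)
    # batch_size=10 is an assumed value; the original leaves batch_size undefined
    train_loader = DataLoader(dataset, batch_size=10, shuffle=True)

To use torchvision's tr.ToTensor() instead of the custom ToTensor() class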

    class MyTransform:
        def __call__(self, sample):
            inputs, labels = sample
            inputs = torch.FloatTensor(inputs)
            inputs = inputs.permute(2, 0, 1)  # HWC -> CHW
            labels = torch.FloatTensor(labels)
            transf = tr.Compose([tr.ToPILImage(), tr.Resize(128), tr.ToTensor(), tr.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))])
            final_output = transf(inputs)
            return final_output, labels

2.3 Using image data organized in per-class folders

When the images are arranged in folders such as ./class/cat and ./class/lion,

torchvision.datasets.ImageFolder loads the whole dataset, assigns the labels automatically from the folder names, and can apply preprocessing at the same time.

    import torchvision

    transf = tr.Compose([tr.Resize(16), tr.ToTensor()])
    train_data = torchvision.datasets.ImageFolder(root='./class', transform=transf)
    train_dataloader = DataLoader(train_data, batch_size=10, shuffle=True, num_workers=2)

3. DataLoader

3.1 Preparing training data with DataLoader

The Dataset retrieves the dataset's features and labels one sample at a time.

When training a model, we typically want to pass samples in minibatches, reshuffle the data at every epoch to reduce overfitting, and use Python's multiprocessing to speed up data retrieval.

DataLoader is an iterable that abstracts this complexity behind a simple API.

    from torch.utils.data import DataLoader

    train_dataloader = DataLoader(training_data, batch_size=64, shuffle=True)
    test_dataloader = DataLoader(test_data, batch_size=64, shuffle=True)

3.2 Iterating through the DataLoader

Once the dataset has been loaded into the DataLoader, we can iterate through it as needed.

Each iteration below returns a batch of train_features and train_labels (containing batch_size=64 features and labels respectively).

Because we specified shuffle=True, the data is reshuffled after all batches have been iterated over.

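Python built-in functions next() and iter()

iter(callable, sentinel) : calls the callable repeatedly until it returns the sentinel value; iter(iterable) returns an iterator over the iterable

next(iterator, default) : returns the next item, or default once the iterator is exhausted

    >>> it = iter(range(3))
    >>> next(it, 10)
    0
    >>> next(it, 10)
    1
    >>> next(it, 10)
    2
    >>> next(it, 10)
    10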

    train_features, train_labels = next(iter(train_dataloader))
    print(f"Feature batch shape: {train_features.size()}")
    print(f"Labels batch shape: {train_labels.size()}")
    img = train_features[0].squeeze()
    label = train_labels[0]
    plt.imshow(img, cmap="gray")
    plt.show()
    print(f"Label: {label}")

    Feature batch shape: torch.Size([64, 1, 28, 28])
    Labels batch shape: torch.Size([64])
    Label: tensor([0., 0., 0., 0., 0., 0., 0., 1., 0., 0.])
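The batching behaviour described in this section can also be checked without downloading FashionMNIST. The sketch below is an assumption-laden stand-in: it wraps random tensors in torch.utils.data.TensorDataset, and the sizes (100 samples, batch size 32) are arbitrary.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Hypothetical stand-in data: 100 grayscale 28x28 "images" with integer labels 0..9
features = torch.rand(100, 1, 28, 28)
labels = torch.randint(0, 10, (100,))
toy_dataset = TensorDataset(features, labels)

toy_loader = DataLoader(toy_dataset, batch_size=32, shuffle=True)

# One full pass over the loader is one epoch; the last batch holds the remainder
batch_sizes = [len(X) for X, y in toy_loader]

# iter() builds a fresh iterator over the loader, next() pulls a single batch
first_X, first_y = next(iter(toy_loader))

print(batch_sizes)                   # [32, 32, 32, 4]
print(first_X.shape, first_y.shape)
```

Passing drop_last=True to DataLoader would discard the final incomplete batch instead of returning it.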

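4. Setting up the training environment

Check whether torch.cuda is available; if it is not, keep using the CPU.

    device = 'cuda' if torch.cuda.is_available() else 'cpu'
    print(f'Using {device} device')

5. Building the neural network model

A neural network model is made up of layers/modules that perform operations on data.

torch.nn provides all the building blocks needed to construct a neural network.

Every module in PyTorch is a subclass of nn.Module.

5.1 Defining the neural network model class

We define the neural network model as a subclass of nn.Module

and initialize the network layers in __init__.

Every class that inherits from nn.Module implements the operations on the input data in its forward method.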

    from torch import nn

    class NeuralNetwork(nn.Module):
        def __init__(self):
            super(NeuralNetwork, self).__init__()
            self.flatten = nn.Flatten()
            self.linear_relu_stack = nn.Sequential(
                nn.Linear(28*28, 512),
                nn.ReLU(),
                nn.Linear(512, 512),
                nn.ReLU(),
                nn.Linear(512, 10),
            )

        def forward(self, x):
            x = self.flatten(x)
            logits = self.linear_relu_stack(x)
            return logits

Create an instance of NeuralNetwork and move it to device.

    model = NeuralNetwork().to(device)
    print(model)

    NeuralNetwork(
      (flatten): Flatten(start_dim=1, end_dim=-1)
      (linear_relu_stack): Sequential(
        (0): Linear(in_features=784, out_features=512, bias=True)
        (1): ReLU()
        (2): Linear(in_features=512, out_features=512, bias=True)
        (3): ReLU()
        (4): Linear(in_features=512, out_features=10, bias=True)
      )
    )

Calling the model on an input returns a tensor holding 10 raw predicted values, one per class, for each input.

Passing the raw predictions through an instance of the nn.Softmax module gives the prediction probabilities.

    X = torch.rand(1, 28, 28, device=device)
    logits = model(X)
    pred_probab = nn.Softmax(dim=1)(logits)
    y_pred = pred_probab.argmax(1)
    print(f"Predicted class: {y_pred}")
    >>> Predicted class: tensor([6])

5.1.1 Layer

To see what happens inside the layers, we use a minibatch of 3 images of size 28x28.

    input_image = torch.rand(3, 28, 28)
    print(input_image.size())
    >>> torch.Size([3, 28, 28])

5.1.2 nn.Flatten

We initialize the nn.Flatten layer to convert each 2D 28x28 image into a contiguous array of 784 pixel values. (The minibatch dimension at dim=0 is kept.)

    flatten = nn.Flatten()
    flat_image = flatten(input_image)
    print(flat_image.size())
    >>> torch.Size([3, 784])

5.1.3 nn.Linear

Linear is a module that applies a linear transformation to the input using its weight and bias.

    layer1 = nn.Linear(in_features=28*28, out_features=20)
    hidden1 = layer1(flat_image)
    print(hidden1.size())
    >>> torch.Size([3, 20])

5.1.4 nn.ReLU

Activation functions are applied after linear transformations to introduce nonlinearity, which lets the network learn a wide variety of phenomena.

    hidden1 = nn.ReLU()(hidden1)

5.1.5 nn.Sequential

nn.Sequential is an ordered container of modules.

A sequential container makes it quick to put together a network such as seq_modules below.

    seq_modules = nn.Sequential(
        flatten,
        layer1,
        nn.ReLU(),
        nn.Linear(20, 10)
    )
    input_image = torch.rand(3, 28, 28)
    logits = seq_modules(input_image)

5.1.6 nn.Softmax

The last linear layer of the network returns logits, raw values in the range (-infty, infty).

The nn.Softmax module scales the logits into the range [0, 1] so that they represent the model's predicted probability for each class.

The dim parameter indicates the dimension along which the values must sum to 1.

    softmax = nn.Softmax(dim=1)
    pred_probab = softmax(logits)

    logits
    >>> tensor([[-0.1024, -0.0443, -0.0061, -0.0646, 0.0962, -0.0137, 0.0917, -0.1101,
                 -0.0819, 0.0465]], grad_fn=<AddmmBackward0>)

    pred_probab
    >>> tensor([[0.0917, 0.0972, 0.1010, 0.0953, 0.1119, 0.1003, 0.1114, 0.0910, 0.0936,
                 0.1065]], grad_fn=<SoftmaxBackward0>)

6. Autograd

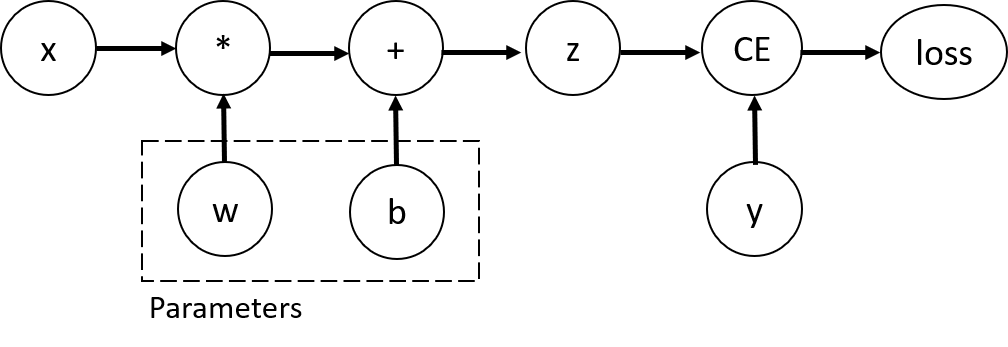

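Backpropagation is at the core of neural network training.

Forward propagation proceeds as follows.

In the network above, the weights and biases are the parameters that need to be optimized.

Backpropagation computes the derivatives of the loss with respect to the weights and biases, which are then used to update them.

To do so, we compute the derivatives of the loss with respect to the intermediate variables and apply the chain rule to finally obtain ∂loss/∂w and ∂loss/∂b.

PyTorch provides torch.autograd, which computes these gradients automatically.

How to use it can be seen in the backpropagation part of the code below.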
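To make the chain rule concrete, here is a minimal sketch of torch.autograd on a single weight and bias. The scalar values and the squared-error loss are assumptions chosen so that the gradients can be checked by hand.

```python
import torch

x = torch.tensor(2.0)                      # input (no gradient needed)
t = torch.tensor(5.0)                      # target
w = torch.tensor(3.0, requires_grad=True)  # weight to optimize
b = torch.tensor(1.0, requires_grad=True)  # bias to optimize

y = w * x + b          # forward pass: 3 * 2 + 1 = 7
loss = (y - t) ** 2    # squared error: (7 - 5)^2 = 4

loss.backward()        # autograd applies the chain rule back to w and b

# By hand: d(loss)/dw = 2 * (y - t) * x = 8 and d(loss)/db = 2 * (y - t) = 4
print(w.grad, b.grad)
```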

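7. Model hyperparameters, optimization, training, validation, and testing

Once the model and the data are ready, it is time to train, validate, and test the model by optimizing its parameters on the data.

Training is an iterative process: in each epoch the model predicts an output, computes the error (loss) between the prediction and the label, collects the derivatives of the error with respect to its parameters, and then optimizes those parameters with gradient descent.

7.1 Hyperparameter

Hyperparameters are adjustable parameters that let you control the model optimization process.

Different hyperparameter values can affect model training and the convergence rate.

epoch - the number of times to iterate over the dataset

batch size - the number of data samples propagated through the network before the parameters are updated

learning rate - how much the model parameters are adjusted at each batch/epoch. Smaller values slow down learning, while large values can cause unpredictable behavior during training.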

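7.2 Loss Function

The loss function measures the error between the prediction and the true value, and training tries to minimize it.

To compute the loss, we make a prediction from the given data sample and compare it against the true label.

Common loss functions include nn.MSELoss (Mean Square Error) for regression and nn.NLLLoss (Negative Log Likelihood) for classification; nn.CrossEntropyLoss combines nn.LogSoftmax and nn.NLLLoss.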
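The relationship between these loss modules can be checked numerically. The sketch below uses random logits and made-up targets, so the tensors are assumptions for illustration only.

```python
import torch
from torch import nn

torch.manual_seed(0)
logits = torch.randn(4, 10)            # raw scores for 4 samples and 10 classes
targets = torch.tensor([1, 0, 9, 3])   # integer class labels

# CrossEntropyLoss takes raw logits directly
ce = nn.CrossEntropyLoss()(logits, targets)

# The same value from LogSoftmax followed by NLLLoss
nll = nn.NLLLoss()(nn.LogSoftmax(dim=1)(logits), targets)

# MSELoss averages squared differences: ((1 - 0)^2 + (2 - 4)^2) / 2 = 2.5
mse = nn.MSELoss()(torch.tensor([1.0, 2.0]), torch.tensor([0.0, 4.0]))

print(ce.item(), nll.item(), mse.item())
```

Because nn.CrossEntropyLoss applies the log-softmax itself, the model should output raw logits and not softmax probabilities when this loss is used.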

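7.3 Optimizer

Optimization is the process of adjusting the model parameters at each training step to reduce the model error.

    optimizer = torch.optim.SGD(model.parameters(), lr=learning_rate)

Inside the training loop, optimization happens in three steps.

- Call optimizer.zero_grad() to reset the gradients of the model parameters.

By default gradients add up, so we explicitly zero them at every iteration to prevent double counting.

- Call loss.backward() to backpropagate the prediction loss. PyTorch stores the gradient of the loss with respect to each parameter.

- Once the gradients are computed, call optimizer.step() to adjust the parameters using the gradients collected in the backward pass.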
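The three steps above, and the reason for zeroing gradients, can be seen on a single parameter. This is a minimal sketch; the toy function 2 * w and the learning rate 0.1 are assumptions chosen to make the numbers easy to follow.

```python
import torch

w = torch.tensor(1.0, requires_grad=True)
optimizer = torch.optim.SGD([w], lr=0.1)

(2 * w).backward()
first = w.grad.item()          # gradient of 2 * w is 2.0

(2 * w).backward()             # no zero_grad() in between, so gradients add up
accumulated = w.grad.item()    # 2.0 + 2.0 = 4.0

optimizer.zero_grad()          # step 1: reset the stored gradient
(2 * w).backward()             # step 2: backpropagate
fresh = w.grad.item()          # back to 2.0

optimizer.step()               # step 3: w <- w - lr * grad = 1.0 - 0.1 * 2.0

print(first, accumulated, fresh, w.item())
```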

7.4 train_loop / test_loop

    def train_loop(dataloader, model, loss_fn, optimizer):
        size = len(dataloader.dataset)
        for batch, (X, y) in enumerate(dataloader):
            # move the batch to the same device as the model
            X, y = X.to(device), y.to(device)

            # forward propagation
            pred = model(X)
            loss = loss_fn(pred, y)

            # backpropagation
            optimizer.zero_grad()
            loss.backward()
            optimizer.step()

            if batch % 100 == 0:
                loss, current = loss.item(), batch * len(X)
                print(f"loss: {loss:>7f} [{current:>5d}/{size:>5d}]")

    def test_loop(dataloader, model, loss_fn):
        size = len(dataloader.dataset)
        num_batches = len(dataloader)
        test_loss, correct = 0, 0

        # gradients are not needed for evaluation
        with torch.no_grad():
            for X, y in dataloader:
                X, y = X.to(device), y.to(device)
                pred = model(X)
                test_loss += loss_fn(pred, y).item()
                correct += (pred.argmax(1) == y).type(torch.float).sum().item()

        test_loss /= num_batches
        correct /= size
        print(f"Test Error: \n Accuracy: {(100*correct):>0.1f}%, Avg loss: {test_loss:>8f} \n")

    learning_rate = 1e-3
    batch_size = 64
    epochs = 5

    loss_fn = nn.CrossEntropyLoss()
    optimizer = torch.optim.SGD(model.parameters(), lr=learning_rate)

    for i in range(epochs):
        print(f"Epoch {i+1}\n----------------")
        train_loop(train_dataloader, model, loss_fn, optimizer)
        test_loop(test_dataloader, model, loss_fn)
    print("Done")

    >>> Epoch 1
    ----------------
    loss: 2.143001 [    0/60000]
    loss: 2.136818 [ 6400/60000]
    loss: 2.114318 [12800/60000]
    loss: 2.030327 [19200/60000]
    loss: 2.062488 [25600/60000]
    loss: 2.009769 [32000/60000]
    loss: 1.964789 [38400/60000]
    loss: 1.966160 [44800/60000]
    loss: 1.953037 [51200/60000]
    loss: 1.913669 [57600/60000]
    Test Error:
     Accuracy: 53.5%, Avg loss: 1.870262

8. Saving and loading the model

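    import torch
    import torchvision.models as models

8.1 Saving and loading model weights

PyTorch models store their learned parameters in an internal state dictionary called state_dict, which can be persisted with the torch.save method.

Saving the VGG model weights

    # pretrained=True follows the original post; newer torchvision releases prefer the weights= argument
    model = models.vgg16(pretrained=True)
    torch.save(model.state_dict(), 'model_weights.pth')

Loading the model weights

    model = models.vgg16()  # pretrained=True is not specified because the default weights are not loaded
    model.load_state_dict(torch.load('model_weights.pth'))
    model.eval()

8.2 Saving and loading the model together with its structure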

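Saving

    torch.save(model, 'model.pth')

Loading

    # On PyTorch 2.6 and later, torch.load defaults to weights_only=True,
    # so loading a whole pickled model requires passing weights_only=False
    model = torch.load('model.pth')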
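The state_dict round trip from section 8.1 can be tried without downloading VGG weights. The sketch below is an illustration under assumptions: it substitutes a small nn.Linear layer for the model and writes to a temporary directory, and the file name weights.pth is hypothetical.

```python
import os
import tempfile

import torch
from torch import nn

model = nn.Linear(4, 2)  # small stand-in for a real model

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "weights.pth")   # hypothetical file name
    torch.save(model.state_dict(), path)      # persist only the parameters

    restored = nn.Linear(4, 2)                # same architecture, fresh random weights
    restored.load_state_dict(torch.load(path))

restored.eval()  # switch to inference mode before evaluating

# Every parameter tensor should match after the round trip
same = all(
    torch.equal(a, b)
    for a, b in zip(model.state_dict().values(), restored.state_dict().values())
)
print(same)
```

Saving only the state_dict requires recreating the model class before loading, but it avoids pickling the class definition along with the weights.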
